A (Likely) Heterodox View of the AI Landscape

A fairly recent, high-profile failure of Google’s Project Gemini and the resulting Sturm und Drang online brought to the fore an all too common misconception: confusing a high-profile technological artifact with the field of scientific inquiry it arose from. More broadly speaking, there is a lack of common understanding with regard to what is meant by the term “Artificial Intelligence”. This makes discussion of the topic difficult, even within the “AI Community”. In this essay, I attempt to present my own (likely heterodox) view on Artificial Intelligence as a scientific discipline, an overview of what I see as its major subdisciplines (and their challenges), and what (despite their differences) they may have in common moving forward.

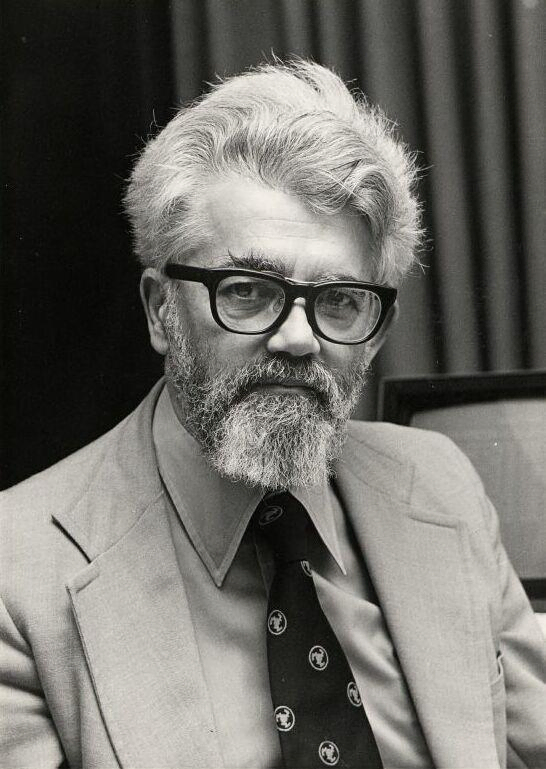

What is Artificial Intelligence?

David Hilbert, 1862–1943.

The term “Artificial Intelligence (AI)” was coined by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude E. Shannon in a proposal they wrote for a conference at Dartmouth in the summer of 1956. While it’s true that the field was named at this time, I believe that the discipline was started almost concurrently with the field of Computer Science as a whole, with a problem posed by David Hilbert in 1928. This problem, known as the Entscheidungsproblem, is formulated as follows:

“Construct a mechanical procedure which given an arbitrary first order formula will determine whether or not it is a tautology.”

In an earlier essay, I detailed my view on how this question spurred Alan Turing, Alonzo Church, Emil Post and others to formulate the concept of an algorithm. This then gave rise to Computer Science as a new field of scientific inquiry focused on revealing the kinds and laws of algorithms (or computable functions) to the faculties of independent reason. To understand how this question is connected to AI, it’s worth spending some time unpacking what the question is actually seeking to accomplish.

In logic, a tautology is a formula (or assertion) that is true under every possible interpretation. Stated another way, a tautology is a universal truth. Viewed from this perspective, the Entscheidungsproblem poses a very deep question: can we define an algorithm which, given a first order formula, determines whether or not it is (or represents) a universal truth? The question gains a new level of depth when viewed from the perspective of these scientists, who believed that first order logic could be a language for describing mathematics and capturing mathematical reasoning. Although Turing, Church and Post proved that this was not possible, their discovery gave birth to the field of AI by raising the following question:

“What are the kinds of correct human reasoning that can be captured by an algorithm (or computable function), and what are the laws that govern them?”

This is the central question of Artificial Intelligence, and firmly places it in the Western scientific tradition. It also shows us how this view of the discipline relates it to software engineering, which is the application of the scientific principles of Computer Science (or in this case AI) to the design of a technological artifact (such as Gemini, or others).
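To make the notion of a tautology concrete, here is a minimal sketch of a brute-force checker. It assumes propositional formulas only, where every interpretation can be enumerated in a finite truth table; the first order case that the Entscheidungsproblem asks about admits no such procedure. The names `is_tautology` and `implies` are illustrative and not taken from any library.

```python
from itertools import product


def implies(a, b):
    """Material implication: a -> b."""
    return (not a) or b


def is_tautology(formula, variables):
    """Return True if `formula` holds under every truth assignment.

    `formula` is a function from a dict of variable values to a bool.
    """
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if not formula(assignment):
            return False  # found an interpretation that falsifies it
    return True


# Modus ponens as a formula: (p and (p -> q)) -> q
modus_ponens = lambda v: implies(v["p"] and implies(v["p"], v["q"]), v["q"])

# Affirming the consequent: (q and (p -> q)) -> p
affirming_consequent = lambda v: implies(v["q"] and implies(v["p"], v["q"]), v["p"])

print(is_tautology(modus_ponens, ["p", "q"]))
print(is_tautology(affirming_consequent, ["p", "q"]))
```

The first formula holds under all four assignments, while the second fails when `p` is false and `q` is true. The enumeration grows exponentially with the number of variables, and it has no counterpart at all once quantifiers over arbitrary domains are allowed.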

A Tale of Two Intuitions

Wilhelm Marstrand, Don Quixote and Sancho Panza at the Crossroads, ca. 1847.

Experientially, we can observe that there appear to be distinct categories of correct human reasoning. This observation explains, in part, the divergence of the field into two subdisciplines, which I loosely call Symbolic AI and Numerical AI.

Symbolic AI

John McCarthy, 1927–2011.

In some sense, Symbolic AI can be viewed as being based on the intuition that human reasoning is a particular kind of internal dialogue, where we progress from one utterance (or thought) to the next in accordance with certain rules which help us discriminate truths from falsehoods. This intuition grounds this branch of AI in mathematical logic.

There are several potential origin points to which one can trace the genesis of Symbolic AI. One potential starting point was the work by Allen Newell, Herbert Simon, and Cliff Shaw on automatic theorem proving. It’s my own view, however, that the origins on a philosophical, methodological, and technical level lie with the work of the late John McCarthy, particularly his paper Programs with Common Sense. In it he gives the following proposal for what it means to say that a program exhibits common sense:

We shall therefore say that a program has common sense if it automatically deduces for itself a sufficiently wide class of immediate consequences of anything it is told and what it already knows.

This kick-started a line of inquiry that had several foundational assumptions:

- Correct human reasoning can be categorized into various natural kinds, which in turn obey certain laws.

- These kinds and laws are discoverable through a combination of rigorous introspection and adversarial dialogue.

Early on, McCarthy posited that we could encode human knowledge as a theory of first-order logic (FOL), and that various reasoning tasks could be reduced to either finding the theory’s models, or proving that certain formulae were entailed by the theory. Minsky, however, countered that FOL was insufficient for this purpose, citing the non-monotonic nature[1] of human reason. As FOL is monotonic, this led to a search for non-monotonic logics, which produced circumscription, default logic, and the logic of Answer-set Prolog[2]. These distinct logics all grew out of different intuitions, which led to an investigative culture in which:

- A representative “reasoning domain” would be presented in the form of a thought experiment (or toy problem). Examples of such experiments are “The Yale Shooting Problem”, “Lin’s Briefcase”, “Nixon’s Diamond”, “The Tweety Problem”, and many others.

- Solutions to these various problems would be put forward using these different formalisms (often before it was known whether they could even be made executable through software).

- These solutions were then discussed in a friendly, but adversarial manner[3], with the goals of studying the distinctions between the formalisms themselves and developing a deeper understanding of the natural kinds of reasoning tasks they were representative of.

This led to significant discoveries, and even the formation of entirely new disciplines[4] within the AI umbrella. Among the many discoveries are: equivalence classes for certain non-monotonic logics; default reasoning as a distinct natural kind; precise formulations of various reasoning tasks (e.g., temporal projection, planning, diagnostic reasoning, and counterfactual reasoning); and even the development of new programming languages and paradigms and their attendant algorithms and methodologies. These discoveries led to some notable successes such as the development of a decision support system for the U.S. Space Shuttle, academic course scheduling, train scheduling, and more. More recently, a new startup by the name of Elemental Cognition, founded by David Ferrucci (of IBM Watson fame), has begun building Hybrid AI systems partly on top of the foundations laid by the Symbolic AI community.
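To illustrate what non-monotonic reasoning means in practice, the Tweety problem named earlier can be sketched in a few lines. This is only an informal illustration that assumes a simple "true unless known otherwise" reading of the default rule; it is not a faithful encoding of circumscription, default logic, or Answer-set Prolog, and the function `can_fly` and the fact tuples are hypothetical names chosen for this example.

```python
def can_fly(animal, facts):
    """Default rule: a bird flies unless it is known to be an exception."""
    is_bird = ("bird", animal) in facts
    is_exception = ("penguin", animal) in facts
    return is_bird and not is_exception


# All we know at first is that Tweety is a bird.
facts = {("bird", "tweety")}
before = can_fly("tweety", facts)  # concluded by default

# New information arrives: Tweety is a penguin.
facts.add(("penguin", "tweety"))
after = can_fly("tweety", facts)  # the earlier conclusion is withdrawn

print(before, after)
```

Adding a fact removed a conclusion that previously held. In a monotonic logic such as FOL this cannot happen, since anything entailed by a theory remains entailed when the theory is extended.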

Numerical AI

Unlike Symbolic AI, what’s typically called Numerical AI is based on a different intuition, one rooted in another form of intelligence we possess: the ability to recognize patterns. Consider for example the gift of sight. It’s by no means obvious how or why we are able to quickly distinguish individual objects from each other when we look about the world. The same is true for other forms of patterns in the natural world which we detect through experimentation.

The latter mention of experimentation is of particular importance since it ties us directly to the notion of machine learning, and ultimately the large language models that have caused such a stir in recent days. The fundamental idea here is that, via a series of experiments in which we gather data about a collection of parameters of interest and some outcome, we can (ideally programmatically) derive a curve which best fits our observations. This notion was then coupled with another intuition, based on the biological makeup of the brain, that shaped the work done by Warren McCulloch and Walter Pitts on neural networks. The idea here is that we could model the notion of a computation through the way in which signals are passed through a lattice-like structure of neurons.

To see the way these two are combined, imagine you are learning some sort of physical skill (driving for example). In the beginning, it’s a fairly laborious process. It requires one to develop hand-eye coordination and situational awareness, in addition to learning how to operate the vehicle and the various laws of the road. Each day spent training however, if done with the proper feedback, ingrains within our minds good habits, and counteracts poor ones, until we develop the skill as almost a second nature. The same basic principle applies here: we build a network of neurons that initially represents an “unencumbered mind”, and then by feeding it a large sequence of experiments, we in essence mimic our own internal process, until by an adjustment mechanism, the neural network essentially learns the appropriate function (or best-fit curve). Depending on the nature and volume of the experiments, the curve can be a simple polynomial, or a complex probability distribution, and almost anything in between.
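The adjustment mechanism described above can be sketched with the smallest possible "network": a single linear unit with one weight and one bias, trained by gradient descent on mean squared error. The data, the learning rate, and the iteration count below are assumptions made purely for illustration; real networks have many more parameters and use more elaborate training procedures.

```python
# Observations from a hypothetical experiment whose true law is y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]

# The "unencumbered mind": parameters that encode no knowledge yet.
w, b = 0.0, 0.0
learning_rate = 0.05  # assumed step size, small enough to converge here

for _ in range(2000):
    # Gradient of the mean squared error with respect to w and b.
    grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / len(xs)
    grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / len(xs)
    # Feedback step: nudge each parameter against its gradient.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))
```

Each pass plays the role of a day of practice with feedback: the error on the observations is measured and the parameters are adjusted slightly to reduce it, so that `w` and `b` approach the slope and intercept of the line that generated the data.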

A large language model (LLM) is a deep neural network which is trained on an extremely large dataset composed of linguistic information (hence the name), and which learns a complex probability distribution that captures the following information:

- Given a character $X$, what is the probability that some other character $Y$ follows it?

- Given a word $W$, what is the probability that some other word $Z$ follows it?

- Given a sequence of words of some size, $S$, what is the probability of some other sequence $T$ following it?

- And so on…
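The second question in the list above can be illustrated with a toy bigram model that estimates these probabilities by counting. This is a deliberately simplified sketch: actual LLMs work over tokens, condition on long contexts, and learn the distribution with a neural network, not a lookup table. The corpus and the function names `train_bigram` and `next_word_probability` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(text):
    """Count, for each word, how often every other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probability(counts, word, candidate):
    """Estimate P(candidate follows word) from the observed counts."""
    total = sum(counts[word].values())
    return counts[word][candidate] / total if total else 0.0


corpus = "the cat sat on the mat the cat ate"
model = train_bigram(corpus)

print(next_word_probability(model, "the", "cat"))
print(next_word_probability(model, "the", "mat"))
```

In this corpus "the" is followed by "cat" twice and by "mat" once, so the estimates are two thirds and one third. Nothing in the table records what a cat or a mat is; only co-occurrence is captured.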

On the surface, when we put a question to an LLM such as Claude, we can get results that appear to mimic how an intelligent, knowledgeable person might respond, and that in fact appear to be capable of performing (or mimicking) fairly high-level reasoning tasks such as summarization of a given text. This has caused a great deal of excitement throughout the world about the potential of these artifacts.

Do all Roads Lead to Rome?

Despite their successes, however, the recent dramatic failure of Gemini and the resulting controversy have raised quite a few questions about what it is that LLMs actually do. It’s worth noticing that the semantics of the words and sentences within an LLM’s training set are absent. Furthermore, the only notion that an LLM has of correctness is based on either positive or negative feedback that’s part of its training data and subsequent interactions. There’s no notion of any inference system inherent in the artifact itself. The same holds for any notions of causality, or first principles. This means that if we ask an LLM to explain why it gave a particular response, the only meaningful way to understand the resulting answer is that it’s simply the most probable sequence of words which would follow the request (even if the sequence is gibberish).

OK, so you may ask “What now?” How do the different disciplines of AI and their corresponding artifacts potentially relate to each other? Why does it matter?

The question of why it matters is answerable by recalling my own definition of artificial intelligence as a scientific discipline that seeks to answer the question: “What are the kinds of correct human reasoning that can be captured by an algorithm (or computable function), and what are the laws that govern them?” It’s my own belief that this question is important not only because it can tell us something profound about computable functions and their nature, but also because it has the potential to teach us something even more profound about our own.

As for how they relate to each other, the answer is tricky. At least in the short term, I suspect that the ability to develop Hybrid AI systems which combine the best of both disciplines will become increasingly important. In such systems the LLM can serve as a very large knowledge base which then interacts with a symbolic reasoning system to do higher level reasoning over the data embedded within the LLM, and to provide a means of explaining any answers it gives in a verifiably correct manner.

I also believe there’s a very intriguing scientific question to be asked as well. In the 1960s, the linguist Noam Chomsky posited that human language has both a deep and a surface level structure, with the surface level structure relating to phonetic rules of sound and the deep structure pertaining to a kind of shared meaning or semantics across languages, which is somehow an actual part of our biological minds. I am unsure (not being a linguist) whether the truth of this hypothesis has been established or dispelled, but it raises the question of whether or not there could be something similar with the non-monotonic logics, inference mechanisms, and axioms that have been studied within the Symbolic AI community. That is to say, is there a deep structure of “correct reasoning” that is buried somewhere (potentially biologically) in our minds, that can still be investigated by more thoroughly understanding the nature of all of the connections between symbols in a large language model that has been trained on the best of what we have created as humans?

1. In layman’s terms, a logic is non-monotonic if it enables us to withdraw prior conclusions in the presence of new information.

2. I am taking some liberties here with naming. Answer-set Prolog is a programming language that arose from the stable model semantics of logic programs. In some sense the two have become synonymous, particularly with the development of the answer set programming paradigm.

3. Some of these discussions are part of the academic record and can be teased out from the academic literature. Others, however, have been saved for posterity here.

4. The fields of knowledge representation and commonsense reasoning in particular come to mind.